Answer

Apr 22, 2025 - 01:12 PM

When people ask us whether AI is going to destroy content creation and its value, we try to be honest and open-minded about where we see things going so that our customers can invest wisely in the future. Based on what we know so far, we think human beings sharing their experiences through content will have a place for a very, very long time. The reason is that AI can only feed on the data it can get its "fingers" on, and that is all it "knows" and can spit back out. It only has access to a tiny fraction of what has been captured and saved digitally, and that in turn is only a fraction of the data that humans experience every day and rely on to make good decisions. It's an incredible amount of data, but both Google and the LLMs (Large Language Models) rely on people to capture and communicate the "first to market" experiences, which AI then feeds on, tries to assess for trustworthiness, and then offers up as potential "good information" that you can rely on.

See this video that captures this point, where Yann LeCun (VP & Chief AI Scientist at Meta, aka Facebook) explains that a 4-year-old child has seen 50x more information than the biggest LLMs that we have.

That's a HUGE amount of information that AI does not have, and that's just a four-year-old child and what they take in through their eyes.

Let's Run Some Additional Numbers Based on That Premise (You can skip if you don't want to geek out on this point)

If a 4-year-old child, just through the data they receive through their vision (20MB per second), has taken in 50x more data than the "largest LLMs (Large Language Models) that we have", let's expand that out to the data that all of the people in the world take in per year through their unique experiences, decisions, locations, etc., compared to the largest LLMs (again, just through their vision). If you take the "50x more over 4 years", that works out to 12.5x per year (50x divided by 4 years). So every year, each person on the planet, in their waking hours, consumes 12.5x more data just through their eyes than the largest LLM had (as of when he made that video).

Now let's multiply that 12.5x by all the people who are taking in that data per year. The population of the world is currently around 8 billion people, all living unique lives, putting themselves in unique situations, and seeing and experiencing different things at different times in history. If you multiply the "12.5x larger than the largest LLMs" by the entire population of the world (12.5 x 8,000,000,000), it comes out to people in the world taking in roughly 100,000,000,000x the amount of data per year in comparison to the biggest LLMs on the market. Again, just through their vision.
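For anyone who wants to check the multiplication, here is a minimal sketch of the arithmetic. It uses only the round figures from the text (50x over 4 years, a population of 8 billion); the variable and function names are ours and purely illustrative, and it assumes every person takes in visual data at the same rate as the child in the example.

```python
# Inputs taken from the text above; names are illustrative, not from any library.
RATIO_OVER_FOUR_YEARS = 50          # "50x more" data than the largest LLMs by age 4
YEARS = 4
WORLD_POPULATION = 8_000_000_000    # "around 8 billion people" (a round assumption)


def per_person_yearly_multiple(ratio: float, years: float) -> float:
    """Spread the cumulative multiple evenly across the years."""
    return ratio / years


def worldwide_yearly_multiple(ratio: float, years: float, population: int) -> float:
    """Scale the per-person yearly multiple up to the whole population."""
    return per_person_yearly_multiple(ratio, years) * population


per_person = per_person_yearly_multiple(RATIO_OVER_FOUR_YEARS, YEARS)
worldwide = worldwide_yearly_multiple(RATIO_OVER_FOUR_YEARS, YEARS, WORLD_POPULATION)

print(f"Per person, per year: {per_person}x")      # 12.5x
print(f"Worldwide, per year: {worldwide:,.0f}x")   # 100,000,000,000x
```

The result scales linearly with the population figure you plug in, so a lower estimate of about 7.7 billion people gives roughly 96 billion rather than 100 billion. Either way, the order of magnitude is the point.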

AI & LLMs are Great, But Notably Lacking in "Real World" Experience and Data

If the above is anywhere near where things are at with AI and LLMs (assuming that the VP & Chief AI Scientist at Meta knows anything close to what he's talking about), people in the world consume roughly 100,000,000,000x (12.5 x 8 billion) the amount of data per year in comparison to the biggest LLMs on the market. That's quite a difference, and that's just the NEW data people take in each year, and ONLY through vision. Now factor in all of the other sensory systems that real people have, and then all of their years of experience. Bottom line: AI and LLMs are great, but they only have access to a tiny fraction of real human experience, so they fall well short of accounting for all of the variables that human beings take into account. This is important because the people searching for data and solutions are real human beings too, and what they find will have an impact on them.

An Interesting Twist, Most New Content May be AI-Generated or Translated

Keep in mind that LLMs continue to get smarter by getting access to new data. The issue is that much of the content being produced and published now is AI-generated, or an automated (or semi-automated) translation of knowledge that has already been shared. So it's not unique information; it's just a new form of what is already out there. Forbes published a reference to a study from Amazon which reports that "roughly 57% of all web-based text has been AI generated or translated through an AI algorithm" (see article). Think about that: over half of the content published may be AI-generated or machine-translated. That is what the LLMs are going to try to access in order to get "smarter" and more knowledgeable? They're going to need to filter through all that regurgitated information and identify (and incentivize the creation of) "real" new and unique experiences (Google's doing this through their "information gain score").

What does this mean? AI is great, but humans have a big place in the future of content creation and publishing. People need it and, interestingly, AI needs it too.

AI Can't Have The "Full Picture" without Real People for a Long, Long Time (if ever)

We like to compare LLMs and AI to the "guy in the bar" from the movie Good Will Hunting. The guy is very well read and can regurgitate and recite incredibly impressive-sounding content, and to the layperson he seems to know what he's talking about. But the lesson of that clip is that sounding eloquent and having a lot of knowledge doesn't mean you can rely on what comes out of his mouth or trust that he's drawing the right conclusions. He can sound super smart and intelligent, but he's very limited in the conclusions he can draw because he's only producing based on what he's read and seen, and that is just a fraction of the picture.

Great movie and great scene (see the clip here), and a great lesson. AI knows a lot, but it shouldn't speak so confidently because it has only digested a very small fraction of all the variables to consider. It doesn't have the full picture.

Another point made in the movie drives this home quite well. It's when Sean turns the concept back on Will and tells him "You don't have the faintest idea what you're talkin' about," because Will is lacking real experience on the topics and is relying way too much on things that have been written by somebody else, which is just a tiny fraction of what needs to be considered.

The character Will is super knowledgeable, more so than most of the people society would consider the cream of the crop (like the guy in the bar; for our purposes, think AI and LLMs). But without life experience, it's impossible to get the full picture that's often needed to make informed decisions in real life (another great clip, worth the watch here), especially when it comes to consequential decisions. There is a reason why Google has a special category for "YMYL". YMYL stands for "Your Money or Your Life" and refers to content that Google holds to a higher quality standard because of its potential impact on users' happiness, health, financial stability, and safety. When you're counting on content to help you determine consequential things, you're going to want the full picture to make a decision. The more consequential the decision, the more you need the full picture.

The fact is, AI will always need to feed on the data it can get its fingers on, and it can only get its fingers on data that is accessible to it. The amount of data it has access to will continue to increase as more content is shared, as the cameras and microphones on every Tesla start to build models off that data, if Ray-Ban & Meta's "Smart Glasses" ever become mainstream and collect and save the data they see, or if Tesla's Optimus robots start entering the home and walking around. But these things are a very long way off from the mass adoption needed to collect and process anywhere near the amount of data that humans do.

The data and experience already held within the human brain, along with what it will continue to consume, filter, and turn into conclusions based on a lifetime of experiences, has a huge place in the world moving forward. There will always be new products and new experiences, in new locations, at unique periods of time in history, and that data is gold for human beings and AI. There will always be a "first to market" in sharing those experiences, and that is what Google is rewarding through its "information gain score": it's able to recognize who is providing that new value, and it creates the incentive for the creators of that content through rankings. For businesses, that means that if you can channel the expertise of your employees into great content, you can lift the visibility of your brand and the rankings of your products and services.

You and the people within your organization bring a huge amount to the table, and both Google and AI need what you have. You need to leverage that. Now, AI does have an awesome place in this picture: efficiency.

AI is Super Efficient in Processing Data

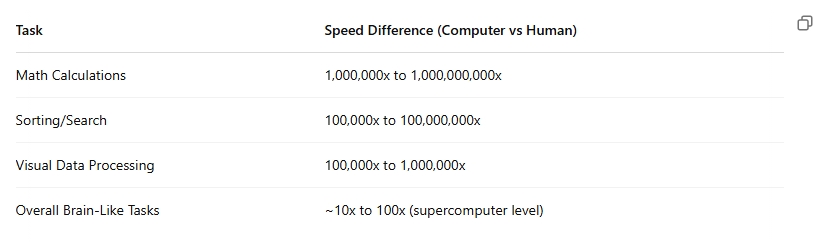

The data that AI and computers do get their hands on, they can process much faster than humans can. You'll find different figures on this depending on the details of what you're looking for, but the general consensus is that a computer is many multiples faster at processing data. Given that, we'll just let you know what AI told us this looks like so you have a high-level view (let's assume it's somewhere in the ballpark of reality).

So, for the data that AI does have access to, it can process and use it MUCH faster than human beings can. To give you an idea, humans can output content at a pace of 10 bits per second, "roughly the pace of typing or conversation" (see reference). What this means is that AI can do a lot of the legwork in processing a huge amount of data (the data that it has access to) and speed up the creation of valuable, original content, so that those 10 bits of human output provide the most value in the most efficient manner. The way this plays out in Answerbase is that we have an "Enhance with AI" capability, where our customers' employees can answer a question with their original expertise, and then AI can surround that "original content and insight gold" with what it does well: making the answer readable in the context of the question that was asked, grammatically correct, and in a pleasant customer service tone. Our customers can spend their 10 bits of output on their unique knowledge and let AI handle the rest, so the person doesn't need to worry about a large share of what they would otherwise have to consider.

Just keep in mind that when it comes to the root of the knowledge being shared, AI needs data to process, and it's only getting a fraction of the data that humans are experiencing. There is a huge amount of data that it doesn't have its "fingers" on, and it would need that data to produce content that is valuable and relevant to the human experience.

Use AI for Efficiency

As noted above, what computing power and LLMs are great for is efficiency. We see that we can speed up helpful-content optimization processes for ecommerce by 12x to 48x compared to what customers can do without Answerbase, so it's a huge benefit. But humans still play a huge role in ensuring that the end product is valuable for your customers. AI should be used for efficiency, not relied on to "know what it's talking about" all the time.

Bottom Line - Google and AI Both Need and Need to Reward Human Beings

AI models are great, and they'll continue to get better, but they're only as powerful as the information they have access to. They're limited in how they "experience" life because they're limited in what they have access to, and they're not filtering that data through the kind of conclusions we draw from touching, feeling, smelling, trying, failing, trying again, succeeding, etc. Every person has years of experience and data that they're not only consuming, but filtering through their previous experiences to draw conclusions that inform future behaviour. AI is amazing, and it's going to keep getting more amazing, but people have a place in the content creation game for a long time as they communicate their experiences and conclusions in content that is not only valuable to other people but continuously necessary for AI to be valuable in the future. Google has created the incentives for that original expertise and experience to be shared, and AI or any other search engine will need to do the same if it wants to survive and thrive in the future.